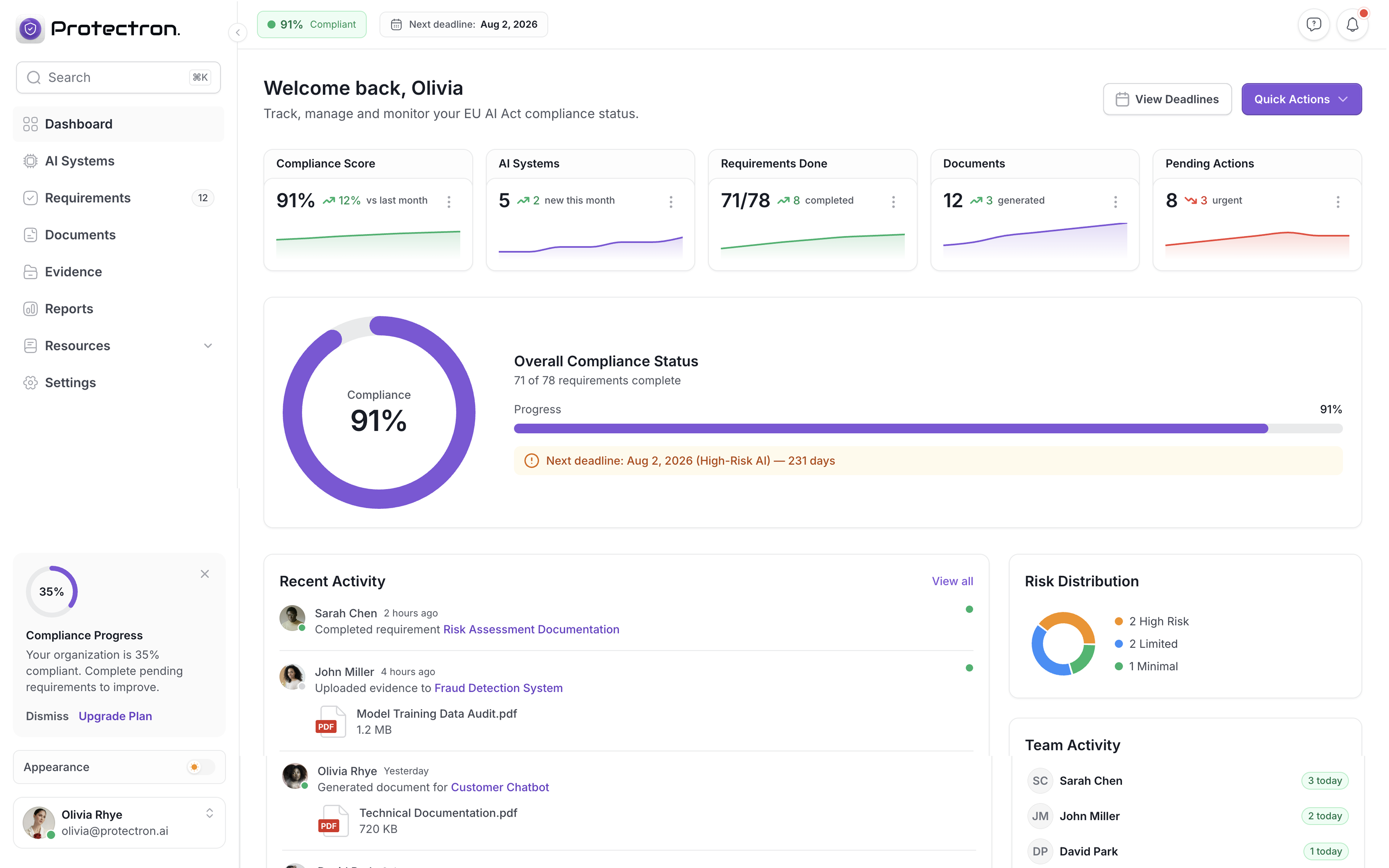

Compliance Guide

What is the EU AI Act? A Complete Guide for 2026

Everything you need to know about the world's first comprehensive AI regulation, and how it affects your business. Learn about risk classification, compliance requirements, and key deadlines.

January 15, 2026 · 24 min read
