The EU AI Act (officially Regulation (EU) 2024/1689) is a landmark regulation that establishes rules for the development, deployment, and use of artificial intelligence across the European Union. It entered into force on August 1, 2024, with different provisions taking effect between February 2025 and August 2027.

Here's what you need to know at a glance:

- What it regulates: AI systems placed on the EU market or used within the EU

- Who it affects: Providers (developers), deployers (users), importers, and distributors of AI systems

- How it works: Risk-based approach with four tiers—unacceptable, high, limited, and minimal risk

- Key deadline: August 2, 2026 for most high-risk AI system requirements

- Maximum penalty: €35 million or 7% of global annual turnover

If you're a startup founder, CTO, or compliance officer wondering whether your AI product falls under this regulation, the short answer is: probably yes, if you serve EU customers.

What is the EU AI Act?

The EU AI Act is the European Union's comprehensive regulatory framework for artificial intelligence. Approved by the European Parliament on March 13, 2024, formally adopted by the Council on May 21, 2024, and published in the Official Journal on July 12, 2024, it represents the world's first attempt by a major regulatory body to create binding rules specifically for AI systems.

At its core, the EU AI Act aims to:

- Protect fundamental rights: Ensure AI systems don't harm individuals' health, safety, privacy, or other fundamental rights

- Promote trustworthy AI: Establish clear standards for transparency, accountability, and human oversight

- Prevent market fragmentation: Create uniform rules across all 27 EU member states

- Foster innovation: Balance regulation with support for AI development, especially for smaller companies

- Address emerging risks: Create mechanisms to respond to new AI applications and risks as they emerge

The regulation takes a risk-based approach, meaning the obligations placed on AI systems vary based on the potential harm they could cause. Some AI applications are banned entirely, others face strict requirements, and many remain largely unregulated.

The Legal Foundation

The EU AI Act is a regulation, not a directive. This distinction matters:

- Regulations apply directly and uniformly across all EU member states without requiring national implementation

- Directives must be transposed into national law, which can lead to variations between countries

This means that from the moment each provision takes effect, it becomes legally binding throughout the EU. Companies cannot rely on individual member states having different interpretations or enforcement approaches.

The Act sits within the EU's broader digital strategy, alongside:

- The General Data Protection Regulation (GDPR) for data privacy

- The Digital Services Act (DSA) for online platforms

- The Digital Markets Act (DMA) for large tech gatekeepers

- The Data Act for data sharing and access

Why Does the EU AI Act Exist?

The EU AI Act emerged from growing concerns about the rapid deployment of AI systems across society without adequate safeguards. Several factors drove the need for regulation:

1. Protecting Fundamental Rights

AI systems increasingly make or influence decisions that significantly impact people's lives:

- Hiring and recruitment algorithms that determine who gets job interviews

- Credit scoring systems that decide loan approvals

- Healthcare AI that influences diagnoses and treatment recommendations

- Law enforcement tools that affect criminal justice outcomes

Without regulation, these systems can embed and amplify biases, operate without transparency, and make consequential decisions without meaningful human oversight.

2. Addressing the "Black Box" Problem

Many AI systems, particularly those using deep learning, operate in ways that even their creators struggle to explain. This opacity creates problems when:

- Individuals can't understand why they were denied a job, loan, or service

- Companies can't audit their own systems for bias or errors

- Regulators can't assess whether systems comply with existing laws

The EU AI Act mandates transparency and explainability requirements to address this challenge.

3. Preventing a "Race to the Bottom"

Without common standards, companies might compete on cost by cutting corners on safety, testing, and oversight. The EU AI Act establishes a floor of requirements that apply to all market participants, ensuring responsible companies aren't disadvantaged.

4. The "Brussels Effect"

The EU has a history of setting global regulatory standards. Just as GDPR became a de facto global privacy standard, the EU AI Act is expected to influence AI regulation worldwide. Companies operating globally often find it easier to adopt a single compliance standard rather than maintaining different practices for different markets.

5. Building Public Trust

Surveys consistently show public concern about AI systems, particularly regarding privacy, bias, and autonomous decision-making. By establishing clear rules and enforcement mechanisms, the EU AI Act aims to build confidence that AI can be used safely and ethically.

Who Does the EU AI Act Apply To?

The EU AI Act has broad territorial scope, meaning it affects organizations far beyond the EU's borders.

The Act Applies To:

1. Providers (Developers)

Any natural or legal person that:

- Develops an AI system or has one developed on their behalf

- Places the AI system on the market or puts it into service under their own name or trademark

This includes companies that build AI products, train AI models, or create AI-powered features.

2. Deployers (Users)

Any natural or legal person that uses an AI system under their authority for professional purposes. This means:

- Companies using AI recruitment software

- Banks deploying credit scoring algorithms

- Healthcare providers using diagnostic AI

- Any business using AI tools in their operations

3. Importers

EU-based entities that place AI systems from non-EU providers on the EU market.

4. Distributors

Entities in the supply chain (other than providers or importers) that make AI systems available on the EU market.

5. Product Manufacturers

Entities that place products containing AI systems on the market under their own name or trademark.

Extraterritorial Reach

Here's the crucial point for non-EU companies: The EU AI Act applies regardless of where you're located if:

- Your AI system is placed on the EU market

- Your AI system is used within the EU

- The output of your AI system is used within the EU

This means a US-based startup selling AI-powered HR software to European companies must comply. A UK company post-Brexit serving EU customers must comply. An Asian AI provider whose tools are used by EU businesses must comply.

Who Is Exempt?

The Act does not apply to:

- AI systems developed or used exclusively for military, defense, or national security purposes

- AI systems used for scientific research and development (before market placement)

- Personal, non-professional use of AI systems

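- AI systems released under free and open-source licenses (unless they are placed on the market as high-risk systems, fall under a prohibited practice, or trigger the transparency obligations)

- Public authorities in third countries and international organizations that use AI under law enforcement or judicial cooperation agreements with the EU or its member states (provided they offer adequate safeguards for fundamental rights)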

How Does the Risk-Based Approach Work?

The EU AI Act categorizes AI systems into four risk tiers, with obligations proportional to the potential harm:

| Risk Level | Obligations | Examples |
|---|---|---|
| Unacceptable | Prohibited | Social scoring, manipulative AI, certain biometrics |
| High | Strict requirements | HR/recruitment, credit scoring, healthcare |
| Limited | Transparency only | Chatbots, emotion recognition, deepfakes |
| Minimal | No obligations | Spam filters, AI video games, most current AI |

This pyramid structure means that most AI systems—perhaps 85-90% of those currently deployed—fall into the minimal risk category and face no specific obligations under the Act. However, if your AI system falls into the high-risk category, compliance requirements are substantial.
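
The four tiers can be read as an ordered check, from most to least restrictive. The sketch below is a minimal illustration, not a legal test: `AISystemProfile`, its fields, and `classify` are hypothetical names, and each boolean stands in for a legal assessment that has to be made separately.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass(frozen=True)
class AISystemProfile:
    """Hypothetical summary of the answers from a legal review of one system."""
    uses_prohibited_practice: bool = False   # e.g. social scoring
    is_high_risk_use_case: bool = False      # e.g. recruitment, credit scoring
    has_transparency_trigger: bool = False   # e.g. chatbot, deepfake generation


def classify(profile: AISystemProfile) -> RiskTier:
    """Return the most restrictive tier that applies to the system."""
    if profile.uses_prohibited_practice:
        return RiskTier.UNACCEPTABLE
    if profile.is_high_risk_use_case:
        return RiskTier.HIGH
    if profile.has_transparency_trigger:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


spam_filter = AISystemProfile()
cv_screener = AISystemProfile(is_high_risk_use_case=True, has_transparency_trigger=True)
print(classify(spam_filter).value)
print(classify(cv_screener).value)
```

The function returns only the most restrictive tier. A high-risk system that also talks to people still has to meet the transparency obligations, so a real inventory would likely record every trigger rather than a single label.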

Why Risk-Based?

The EU chose this approach to:

- Focus resources on AI applications that pose genuine risks

- Avoid over-regulation of beneficial, low-risk AI applications

- Provide clarity for businesses about their obligations

- Enable flexibility as AI technology evolves

What AI Systems Are Prohibited?

The EU AI Act identifies certain AI applications as posing unacceptable risk to fundamental rights. These are banned entirely from February 2, 2025.

Prohibited AI Practices Include:

1. Manipulative AI Systems

AI that deploys subliminal, manipulative, or deceptive techniques to distort behavior and impair informed decision-making, causing significant harm.

2. Exploitation of Vulnerabilities

AI systems that exploit vulnerabilities related to age, disability, or socio-economic circumstances to distort behavior and cause significant harm.

3. Social Scoring

AI systems that evaluate or classify individuals based on their social behavior or personal traits, leading to detrimental treatment that is unjustified or unrelated to the context in which the data was originally collected. In the final text of the Act, this ban covers both public and private actors.

4. Predictive Policing Based Solely on Profiling

AI systems that make risk assessments of individuals to predict criminal offenses based solely on profiling or personality traits (without objective, verifiable facts).

5. Untargeted Facial Recognition Database Scraping

Creating facial recognition databases through untargeted scraping of images from the internet or CCTV footage.

6. Emotion Recognition in Workplaces and Schools

AI systems that infer emotions of employees in workplaces or students in educational institutions (with narrow exceptions for safety or medical purposes).

7. Biometric Categorization Inferring Sensitive Attributes

AI that categorizes individuals based on biometric data to infer race, political opinions, religious beliefs, sexual orientation, etc.

8. Real-Time Remote Biometric Identification in Public Spaces

Use by law enforcement of real-time biometric identification in publicly accessible spaces (with narrow exceptions for serious crimes, missing persons, and terrorist threats—subject to prior judicial authorization).

What This Means for Businesses

If your AI system falls into any of these categories, you must:

- Cease using it before February 2, 2025

- Remove it from the EU market

- Not develop new systems with these capabilities

The penalty for prohibited AI practices is the highest tier: up to €35 million or 7% of global annual turnover.

What Are High-Risk AI Systems?

High-risk AI systems face the most extensive compliance requirements. They're classified as high-risk through two pathways:

Pathway 1: Safety Components in Regulated Products (Annex I)

AI systems that are:

- Used as a safety component of a product, OR

- Themselves a product

AND are covered by EU harmonization legislation listed in Annex I that requires a third-party conformity assessment, including:

- Medical devices

- In vitro diagnostic medical devices

- Civil aviation

- Motor vehicles

- Agricultural vehicles

- Marine equipment

- Rail systems

- Machinery

- Toys

- Personal protective equipment

- Lifts

- Equipment for explosive atmospheres

- Radio equipment

- Pressure equipment

For these systems, the AI Act works alongside existing product safety regulations.

Pathway 2: Specific Use Cases (Annex III)

AI systems used in the following areas are classified as high-risk by default:

1. Biometrics (where permitted by law)

- Remote biometric identification

- Biometric categorization by sensitive attributes

- Emotion recognition

2. Critical Infrastructure

- Safety components in management of critical digital infrastructure

- Road traffic and supply of water, gas, heating, and electricity

3. Education and Vocational Training

- Determining access to educational institutions

- Evaluating learning outcomes

- Assessing appropriate level of education

- Monitoring prohibited behavior during tests

4. Employment, Workers Management, and Access to Self-Employment

- Recruitment and selection (CV screening, filtering, evaluating)

- Decisions on promotion, termination, task allocation

- Monitoring and evaluating work performance

5. Access to Essential Private and Public Services

- Evaluating eligibility for public assistance benefits

- Creditworthiness assessment for loans

- Risk assessment and pricing for life and health insurance

- Evaluating and classifying emergency calls

- Emergency healthcare patient triage

6. Law Enforcement (where permitted by law)

- Individual risk assessments for criminal offenses

- Polygraphs and similar tools

- Evaluating evidence reliability

- Profiling during investigations

7. Migration, Asylum, and Border Control

- Polygraphs and similar tools

- Risk assessments (security, irregular migration, health)

- Examining applications for visas, residence permits, asylum

- Identifying individuals

8. Administration of Justice and Democratic Processes

- Researching and interpreting facts and law

- Applying law to facts

- Alternative dispute resolution

- Influencing voting behavior (but not tools for organizing campaigns)

The High-Risk Exception

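An important nuance: an AI system in an Annex III category is not classified as high-risk if it does not pose a significant risk of harm. Under Article 6(3), that is the case when the system meets at least one of the following conditions and does not profile natural persons (profiling always keeps a system high-risk):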

- Perform a narrow procedural task

- Improve the result of a previously completed human activity

- Detect decision-making patterns without replacing human assessment

- Perform a preparatory task to a relevant assessment

Even if you believe your system qualifies for this exception, you must document your assessment and register the system in the EU database before placing it on the market.
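
The exception logic can be sketched as a small decision function. This is an illustration under a stated assumption: it follows the reading of Article 6(3) under which meeting any one of the listed conditions is enough, and under which profiling of natural persons always keeps a system high-risk. The class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnnexIIIAssessment:
    """Hypothetical record of an Article 6(3) self-assessment."""
    narrow_procedural_task: bool = False
    improves_completed_human_activity: bool = False
    detects_patterns_without_replacing_review: bool = False
    preparatory_task_only: bool = False
    profiles_natural_persons: bool = False


def is_high_risk(assessment: AnnexIIIAssessment) -> bool:
    """Annex III systems stay high-risk unless a derogation condition holds."""
    if assessment.profiles_natural_persons:
        return True  # profiling overrides every derogation condition
    derogation_applies = any([
        assessment.narrow_procedural_task,
        assessment.improves_completed_human_activity,
        assessment.detects_patterns_without_replacing_review,
        assessment.preparatory_task_only,
    ])
    return not derogation_applies


cv_formatter = AnnexIIIAssessment(narrow_procedural_task=True)
candidate_ranker = AnnexIIIAssessment(preparatory_task_only=True, profiles_natural_persons=True)
print(is_high_risk(cv_formatter))
print(is_high_risk(candidate_ranker))
```

Whatever the function returns, the documentation and registration duties described above still apply to a system that relies on the exception.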

What Are Limited and Minimal Risk AI Systems?

Limited Risk AI Systems

These systems have transparency obligations only. Users must be informed that they're interacting with AI. This category includes:

1. AI Systems Interacting with Humans

Chatbots, virtual assistants, and AI customer service must disclose their artificial nature—unless it's already obvious from the context.

2. Emotion Recognition and Biometric Categorization

When permitted, these systems must inform individuals that they're being subjected to such systems.

3. AI-Generated Content (Including Deepfakes)

Content generated or manipulated by AI (images, audio, video, text) must be labeled as artificially generated or manipulated. This includes:

- AI-generated images

- Synthetic audio (voice cloning)

- Deepfake videos

- AI-written text that simulates human authorship on matters of public interest

Exception: Artistic, satirical, or creative works where the AI nature is part of the creative concept, and content used for legitimate purposes like crime prevention.

Minimal Risk AI Systems

The vast majority of AI systems fall here and face no specific obligations under the EU AI Act. Examples include:

- Spam filters

- AI-powered video games

- Inventory management systems

- Recommendation algorithms (in most cases)

- Basic predictive maintenance

- Most internal business automation

However, companies can voluntarily adopt codes of conduct and best practices for these systems.

What About General Purpose AI (GPAI)?

The EU AI Act created a separate category for General Purpose AI models—foundation models like GPT-4, Claude, Gemini, Llama, and similar large language models that can be adapted for many different tasks.

Definition

A GPAI model is an AI model that:

- Is trained with a large amount of data using self-supervision at scale

- Displays significant generality

- Can competently perform a wide range of distinct tasks

- Can be integrated into various downstream systems or applications

GPAI Provider Obligations (From August 2, 2025)

All GPAI model providers must:

- Maintain technical documentation including training and testing processes

- Provide information to downstream providers integrating the model

- Comply with EU copyright law and respect opt-out mechanisms

- Publish a detailed summary of training data content

GPAI Models with Systemic Risk

GPAI models are classified as having systemic risk if they:

- Have high impact capabilities (assessed based on benchmarks and evaluations), OR

- Were trained using more than 10^25 floating-point operations (FLOPs) of cumulative compute, which creates a presumption of high impact capabilities
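
To get a feel for the compute threshold, the sketch below estimates training compute with a common rule of thumb: roughly 6 floating-point operations per parameter per training token. That heuristic is an assumption brought in for illustration and is not part of the Act, and the model sizes are made up.

```python
SYSTEMIC_RISK_THRESHOLD_FLOP = 10**25  # threshold stated in the Act


def estimate_training_flop(parameters: int, training_tokens: int) -> int:
    """Rough estimate (assumption): about 6 operations per parameter per token."""
    return 6 * parameters * training_tokens


def presumed_systemic_risk(training_flop: int) -> bool:
    """True when cumulative training compute exceeds the threshold."""
    return training_flop > SYSTEMIC_RISK_THRESHOLD_FLOP


# Hypothetical models: 70 billion and 400 billion parameters, 15 trillion tokens each.
mid_size = estimate_training_flop(70_000_000_000, 15_000_000_000_000)
frontier = estimate_training_flop(400_000_000_000, 15_000_000_000_000)
print(f"{mid_size:.1e}", presumed_systemic_risk(mid_size))
print(f"{frontier:.1e}", presumed_systemic_risk(frontier))
```

A model below the threshold can still be designated as having systemic risk on the basis of its capabilities, so the estimate is a first screen only.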

Providers of systemic risk GPAI models must additionally:

- Perform model evaluations including adversarial testing

- Assess and mitigate systemic risks

- Track and report serious incidents

- Ensure adequate cybersecurity protection

What This Means for Companies Using GPAI

If you're building applications on top of GPT-4, Claude, or similar models:

- The GPAI provider (OpenAI, Anthropic, Google, etc.) handles GPAI-specific compliance

- You are responsible for your application's compliance as either a provider or deployer

- If your application is high-risk, you must ensure the upstream GPAI provider gives you sufficient information to comply

Key Compliance Requirements

For high-risk AI systems, providers must meet extensive requirements across several areas:

1. Risk Management System (Article 9)

Establish and maintain a risk management system throughout the AI system's lifecycle:

- Identify and analyze known and foreseeable risks

- Estimate and evaluate risks from intended use and reasonably foreseeable misuse

- Adopt suitable risk management measures

- Test the system to ensure consistent performance

2. Data Governance (Article 10)

For systems trained with data:

- Use relevant, representative, and accurate training, validation, and testing data

- Implement appropriate data governance practices

- Address bias that could affect health, safety, or fundamental rights

- Maintain data sets or records about data sets

3. Technical Documentation (Article 11)

Create and maintain comprehensive documentation including:

- General description of the system

- Design specifications and development methodology

- Monitoring, functioning, and control mechanisms

- Risk management procedures

- Applied harmonized standards or other specifications

- Description of hardware requirements

4. Record-Keeping (Article 12)

Implement automatic logging that enables:

- Tracing the system's operation throughout its lifecycle

- Monitoring for compliance

- Post-market surveillance

Logs must be kept for an appropriate period (minimum 6 months unless otherwise required).
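
A minimal sketch of what an automatically generated log entry and a retention check might look like. The record fields, the choice to store a hash of the input instead of the raw input, and the helper names are all assumptions made for illustration. The Act does not prescribe a log format.

```python
import calendar
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import date, datetime, timezone
from typing import Optional

MIN_RETENTION_MONTHS = 6  # minimum stated above; other laws may require longer


@dataclass(frozen=True)
class InferenceLogRecord:
    """Hypothetical log entry for one use of a high-risk AI system."""
    system_id: str
    timestamp_utc: str
    input_sha256: str          # hash of the input, to limit stored personal data
    output_summary: str
    human_reviewer: Optional[str]


def make_record(system_id: str, raw_input: str, output_summary: str,
                human_reviewer: Optional[str] = None) -> InferenceLogRecord:
    return InferenceLogRecord(
        system_id=system_id,
        timestamp_utc=datetime.now(timezone.utc).isoformat(),
        input_sha256=hashlib.sha256(raw_input.encode("utf-8")).hexdigest(),
        output_summary=output_summary,
        human_reviewer=human_reviewer,
    )


def earliest_purge_date(logged_on: date, months: int = MIN_RETENTION_MONTHS) -> date:
    """Add calendar months, clamping to the last day of shorter months."""
    index = logged_on.month - 1 + months
    year, month = logged_on.year + index // 12, index % 12 + 1
    day = min(logged_on.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


record = make_record("cv-screener-v2", "candidate CV text", "shortlisted", "reviewer-17")
print(json.dumps(asdict(record)))
print(earliest_purge_date(date(2026, 8, 31)))  # 2027-02-28
```

Writing one JSON object per line keeps the log append-only and easy to audit, which is a design choice of this sketch and not a requirement.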

5. Transparency and Information (Article 13)

Design and develop systems to ensure:

- Operation is sufficiently transparent for deployers

- Deployers can interpret outputs and use them appropriately

- Systems are accompanied by instructions for use covering intended purpose, performance metrics, known limitations, and maintenance requirements

6. Human Oversight (Article 14)

Design systems to allow for effective human oversight:

- Enable individuals to understand capabilities and limitations

- Allow monitoring of operation

- Enable interpretation of outputs

- Provide ability to decide not to use the system or override outputs

- Enable intervention to stop the system

7. Accuracy, Robustness, and Cybersecurity (Article 15)

Ensure AI systems:

- Achieve an appropriate level of accuracy, with the relevant accuracy metrics declared in the instructions for use

- Are resilient against errors, faults, and inconsistencies

- Are resilient against attempts by unauthorized third parties to exploit vulnerabilities

- Implement adequate cybersecurity measures

8. Quality Management System (Article 17)

Providers must establish a quality management system including:

- Strategy for regulatory compliance

- Design and development procedures

- Testing and validation procedures

- Data management specifications

- Risk management procedures

- Post-market monitoring system

- Incident reporting procedures

- Communication with authorities and stakeholders

9. Conformity Assessment (Article 43)

Before placing a high-risk AI system on the market:

- Conduct a conformity assessment procedure

- Use either internal control (self-assessment) or third-party assessment by a notified body, depending on the system type

- Draw up an EU declaration of conformity

- Affix the CE marking

10. Registration (Article 49)

Register the AI system in the EU database before placing it on the market or putting it into service.

EU AI Act Timeline: Important Dates

The EU AI Act doesn't apply all at once. Here's the phased implementation:

| Date | What Happens |
|---|---|
| August 1, 2024 | EU AI Act enters into force |
| February 2, 2025 | Prohibited AI practices banned; AI literacy obligations apply |
| August 2, 2025 | GPAI rules apply; governance structure established; penalties for GPAI violations |
| August 2, 2026 | Main deadline: All other provisions apply, including high-risk AI system requirements |
| August 2, 2027 | Extended deadline for high-risk AI systems that are safety components of products already covered by other EU legislation (Annex I products) |
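
The phased timeline lends itself to a simple date lookup. The sketch below encodes the dates from the table; the shortened labels and the function names are illustrative.

```python
from datetime import date

# Application dates taken from the timeline table.
MILESTONES = [
    (date(2024, 8, 1), "Act in force"),
    (date(2025, 2, 2), "Prohibited practices banned; AI literacy obligations"),
    (date(2025, 8, 2), "GPAI rules and governance structure"),
    (date(2026, 8, 2), "Most remaining provisions, including high-risk requirements"),
    (date(2027, 8, 2), "High-risk requirements for Annex I product safety components"),
]


def milestones_in_effect(on: date) -> list:
    """Return the labels of all milestones that already apply on the given date."""
    return [label for start, label in MILESTONES if start <= on]


def next_milestone(on: date):
    """Return the (date, label) of the next milestone, or None if all have passed."""
    upcoming = [(start, label) for start, label in MILESTONES if start > on]
    return min(upcoming) if upcoming else None


today = date(2025, 3, 1)  # example date
print(milestones_in_effect(today))
print(next_milestone(today))
```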

What Should You Do Now?

Immediately (Before February 2025):

- Audit AI systems for prohibited practices

- Cease any prohibited AI uses

- Begin AI literacy training for relevant staff

By August 2025:

- Understand GPAI obligations if you develop or significantly modify foundation models

- Monitor guidance from the AI Office

By August 2026:

- Complete risk classification of all AI systems

- Implement required documentation, testing, and governance for high-risk systems

- Establish human oversight mechanisms

- Register high-risk systems in EU database

- Conduct conformity assessments

Penalties for Non-Compliance

The EU AI Act establishes a tiered penalty structure similar to GDPR:

Maximum Fines

| Violation Type | Maximum Fine |
|---|---|
| Prohibited AI practices | €35 million or 7% of global annual turnover (whichever is higher) |
| High-risk AI system obligations and most other operator obligations, including transparency duties | €15 million or 3% of global annual turnover |
| Supplying incorrect, incomplete, or misleading information to authorities | €7.5 million or 1% of global annual turnover |

SME and Startup Considerations

For SMEs and startups, the administrative fine caps are:

- The lower of the percentage of turnover OR the fixed amount

- This provides some protection for smaller companies
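
The fine caps reduce to a short calculation: the higher of the fixed amount and the turnover percentage for most companies, and the lower of the two for SMEs and startups. The sketch below covers three of the tiers and assumes the "whichever is higher" rule applies to each of them. The tier keys and the function name are hypothetical, and the result is a ceiling, not a prediction of an actual fine.

```python
# (fixed cap in euros, percentage of global annual turnover)
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "high_risk_obligation": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def max_fine_eur(violation: str, annual_turnover_eur: int, is_sme: bool = False) -> int:
    """Return the maximum administrative fine for a violation tier."""
    fixed_cap, percent = FINE_TIERS[violation]
    turnover_cap = annual_turnover_eur * percent // 100
    if is_sme:
        return min(fixed_cap, turnover_cap)  # SMEs: the lower of the two
    return max(fixed_cap, turnover_cap)      # others: the higher of the two


print(max_fine_eur("prohibited_practice", 2_000_000_000))           # 140000000
print(max_fine_eur("prohibited_practice", 100_000_000))             # 35000000
print(max_fine_eur("prohibited_practice", 5_000_000, is_sme=True))  # 350000
```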

Enforcement

Enforcement is handled by:

- National market surveillance authorities in each EU member state

- The European Commission's AI Office for GPAI model providers

- The European Data Protection Supervisor for EU institutions

Authorities can also:

- Order corrective actions

- Restrict, prohibit, or recall AI systems from the market

- Order destruction of non-compliant systems

How the EU AI Act Compares to GDPR

If you've dealt with GDPR compliance, you'll recognize some familiar themes in the EU AI Act:

| Aspect | GDPR | EU AI Act |
|---|---|---|
| Scope | Personal data processing | AI systems (regardless of data type) |
| Territorial reach | Extraterritorial | Extraterritorial |
| Risk approach | Risk-based processing requirements | Risk-based system classification |
| Documentation | Records of processing | Technical documentation |
| Impact assessments | DPIA | Fundamental rights impact assessment |
| Transparency | Privacy notices | Instructions for use, user disclosure |
| Rights | Data subject rights | Right to explanation for AI decisions |
| Maximum fines | €20M or 4% turnover | €35M or 7% turnover |
| Enforcement | National DPAs | National AI authorities + AI Office |

Key Differences

- The AI Act is broader than data processing: It applies even when no personal data is involved

- Risk is assessed at system level: Not just at processing activity level

- Product safety focus: The AI Act draws heavily from product safety regulation

- Higher maximum penalties: The ceiling is significantly higher than GDPR

Leveraging GDPR Compliance

If you're already GDPR-compliant, you have a head start:

- Accountability frameworks can be extended to AI governance

- DPIA processes can inform fundamental rights impact assessments

- Documentation practices provide a foundation for technical documentation

- Vendor management processes can incorporate AI-specific due diligence

However, GDPR compliance alone is not sufficient for AI Act compliance.

Special Provisions for SMEs and Startups

Recognizing the compliance burden, the EU AI Act includes several support measures for smaller companies:

Regulatory Sandboxes (Article 57)

Each EU member state must establish at least one AI regulatory sandbox by August 2026:

- Priority access for SMEs and startups

- Free of charge for small enterprises

- Test innovative AI under regulatory supervision

- Documentation from sandbox can support compliance

Reduced Fees

Conformity assessment fees must be proportionate and reduced for SMEs and startups based on:

- Size and resources

- Market demand

- Development stage

Dedicated Support Channels

The EU AI Act mandates:

- Dedicated communication channels for SME guidance

- Awareness-raising activities tailored to smaller organizations

- Support for participation in standards development

Proportionate Obligations

Some obligations are explicitly proportionate to size:

- GPAI Code of Practice includes separate KPIs for SMEs

- Quality management systems can be scaled appropriately

What Qualifies as an SME?

Under EU law:

- Medium enterprise: fewer than 250 employees AND (annual turnover not exceeding €50M OR balance sheet total not exceeding €43M)

- Small enterprise: fewer than 50 employees AND (annual turnover OR balance sheet total not exceeding €10M)

- Microenterprise: fewer than 10 employees AND (annual turnover OR balance sheet total not exceeding €2M)
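
The size thresholds can be checked mechanically. The sketch below treats the financial ceilings as inclusive, following the "does not exceed" wording of Commission Recommendation 2003/361/EC, and ignores the rules for aggregating partner and linked enterprises, which can change the outcome. The function name is hypothetical.

```python
def enterprise_category(headcount: int, turnover_eur: int, balance_sheet_eur: int) -> str:
    """Classify an enterprise as micro, small, medium, or large."""

    def within(max_staff: int, max_turnover: int, max_balance: int) -> bool:
        # Headcount must be below the ceiling; only one financial test has to pass.
        return headcount < max_staff and (
            turnover_eur <= max_turnover or balance_sheet_eur <= max_balance
        )

    if within(10, 2_000_000, 2_000_000):
        return "micro"
    if within(50, 10_000_000, 10_000_000):
        return "small"
    if within(250, 50_000_000, 43_000_000):
        return "medium"
    return "large"


print(enterprise_category(8, 1_500_000, 1_000_000))      # micro
print(enterprise_category(40, 12_000_000, 9_000_000))    # small
print(enterprise_category(300, 10_000_000, 10_000_000))  # large
```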

How to Prepare for EU AI Act Compliance

Getting compliant doesn't have to be overwhelming. Here's a practical roadmap:

Step 1: Inventory Your AI Systems

Start by understanding what AI you actually use:

- List all AI systems you develop, deploy, or use

- Include third-party AI tools and APIs

- Document the purpose and scope of each system

Step 2: Classify by Risk Level

For each AI system:

- Determine if it's prohibited (stop using immediately)

- Assess if it falls under Annex I or Annex III high-risk categories

- Document your classification reasoning

Step 3: Identify Your Role

For each AI system, determine whether you're:

- A provider (developer/maker)

- A deployer (user)

- An importer or distributor

Different roles have different obligations.
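
Steps 1 to 3 amount to building a structured inventory. One possible shape for it is sketched below. The field names, the tier labels, and the example systems are assumptions made for illustration, not a prescribed format.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    PROVIDER = "provider"
    DEPLOYER = "deployer"
    IMPORTER = "importer"
    DISTRIBUTOR = "distributor"


@dataclass(frozen=True)
class InventoryEntry:
    """Hypothetical inventory row for one AI system."""
    name: str
    purpose: str
    vendor: str                # "internal" for systems built in-house
    role: Role
    risk_tier: str             # "prohibited", "high", "limited", or "minimal"
    classification_note: str   # reasoning behind the chosen tier


def needs_gap_analysis(entries):
    """High-risk systems move on to the gap analysis."""
    return [e.name for e in entries if e.risk_tier == "high"]


def must_stop(entries):
    """Prohibited systems have to be taken out of use."""
    return [e.name for e in entries if e.risk_tier == "prohibited"]


inventory = [
    InventoryEntry("cv-screener", "Rank job applicants", "internal",
                   Role.PROVIDER, "high", "Annex III: recruitment and selection"),
    InventoryEntry("support-bot", "Answer customer questions", "third-party API",
                   Role.DEPLOYER, "limited", "Interacts with humans; disclosure needed"),
    InventoryEntry("spam-filter", "Filter inbound email", "third-party",
                   Role.DEPLOYER, "minimal", "No Annex I or Annex III use case"),
]
print(needs_gap_analysis(inventory))  # ['cv-screener']
print(must_stop(inventory))           # []
```

Keeping the classification note next to the tier means the reasoning is already on file if a regulator or customer asks for it.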

Step 4: Gap Analysis

For high-risk systems, assess current state against requirements:

- Risk management system

- Data governance

- Technical documentation

- Human oversight mechanisms

- Quality management system

Step 5: Remediation Plan

Prioritize gaps based on:

- Regulatory deadlines

- Risk level

- Effort required

- Business impact

Step 6: Implement Controls

Build or enhance:

- Documentation practices

- Testing and validation procedures

- Monitoring and logging systems

- Human oversight protocols

- Incident response procedures

Step 7: Ongoing Monitoring

Compliance is continuous:

- Monitor AI systems for compliance drift

- Update documentation as systems change

- Track regulatory guidance and standards

- Train staff on AI literacy and compliance

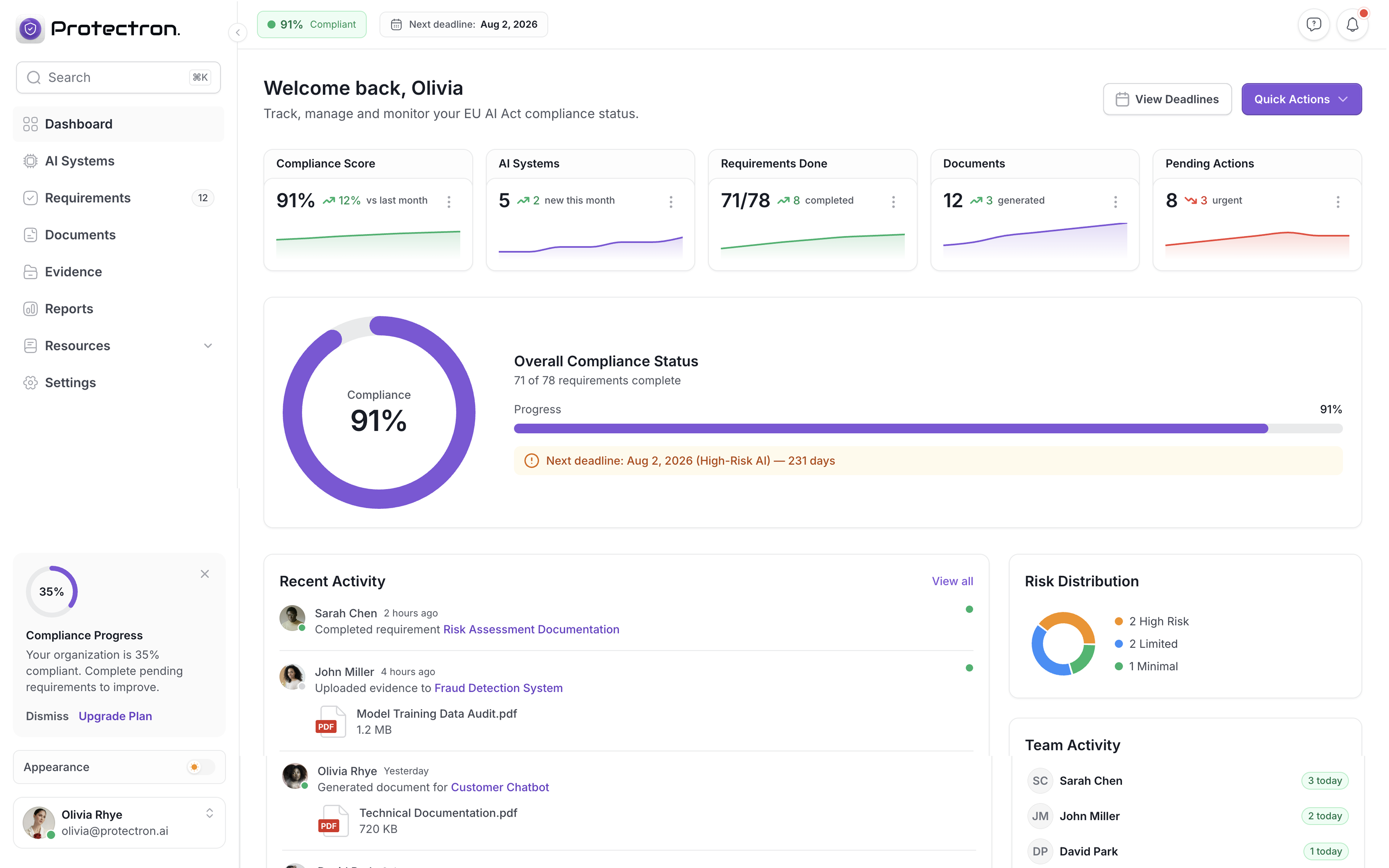

Using Compliance Software

Manual compliance tracking becomes unmanageable at scale. Consider purpose-built compliance platforms that can:

- Automate risk classification

- Generate required documentation

- Track compliance status across your AI portfolio

- Manage evidence and audit trails

- Alert you to regulatory updates

Frequently Asked Questions

Does the EU AI Act apply to US companies?

Yes. The EU AI Act has extraterritorial reach. If your AI system is placed on the EU market, used within the EU, or produces outputs used in the EU, you must comply—regardless of where your company is headquartered.

Does using ChatGPT or Claude make me subject to the AI Act?

It depends. The GPAI provider (OpenAI, Anthropic) handles their model's compliance. However, you're responsible for how you use these tools. If you build a high-risk application (like recruitment screening) using these APIs, your application must comply with high-risk requirements.

Is my chatbot considered high-risk?

Usually not. Customer service chatbots typically fall under "limited risk" and only require disclosure that users are interacting with AI. However, if your chatbot makes decisions about eligibility for services, credit, or employment, it could be high-risk.

Is recruitment/HR software automatically high-risk?

Yes. AI systems used for "recruitment and selection" are explicitly listed in Annex III. This includes CV screening, candidate ranking, interview analysis, and similar tools.

Is credit scoring AI high-risk?

Yes. AI systems used for "creditworthiness assessment" are explicitly listed in Annex III as high-risk.

What's the difference between a provider and deployer?

A provider develops an AI system or has one developed and places it on the market. A deployer uses an AI system under their authority for professional purposes. One company can be both—provider of their own AI and deployer of third-party AI.

Can I get an exemption as a small company?

No exemptions, but support exists. SMEs face the same rules but benefit from reduced fees, priority sandbox access, and proportionate obligations. The compliance requirements don't disappear, but they can be scaled appropriately.

When do I need to be compliant?

Most requirements apply from August 2, 2026. However, prohibited practices are already banned (February 2025), and GPAI rules apply from August 2025. Don't wait until the deadline—compliance work takes time.

How much does compliance cost?

It varies widely. Enterprise compliance programs can cost €50,000+ with consultants. DIY approaches require significant internal resources. Compliance software like Protectron offers an affordable middle ground starting at €99/month.

What's the relationship between EU AI Act and GDPR?

They're complementary. GDPR governs personal data processing. The AI Act governs AI systems regardless of data type. If your AI processes personal data (most do), you need both. GDPR compliance provides a foundation but doesn't satisfy AI Act requirements.

Do I need a conformity assessment for all AI systems?

Only for high-risk systems. Minimal and limited risk systems don't require conformity assessment. High-risk systems need either self-assessment or third-party assessment depending on their category.

Take the Next Step

The EU AI Act represents a significant shift in how AI is regulated globally. Whether you see it as an obstacle or an opportunity depends largely on how prepared you are.

The companies that act now will:

- Avoid last-minute compliance scrambles

- Build customer trust through demonstrated responsibility

- Gain competitive advantage in regulated markets

- Reduce risk of costly penalties and market restrictions

Don't wait until August 2026. Start your compliance journey today.
